AI Ethics & Bias

1. What is AI Ethics & Bias?

AI Ethics refers to the moral principles and guidelines that govern the development and use of artificial intelligence. Since AI systems impact various aspects of life, including healthcare, finance, employment, and law enforcement, it is crucial to ensure they are fair, transparent, and accountable. Ethical AI development focuses on preventing harm, ensuring privacy, and reducing discrimination, making AI systems more reliable and trustworthy for society.

AI Bias occurs when machine learning models favor certain groups or produce unfair results due to biased training data or flawed algorithms. Bias in AI can lead to discrimination in hiring processes, unfair credit approvals, or biased law enforcement decisions. For example, an AI recruitment tool trained on past hiring data may favor male candidates if the historical data reflects gender bias. Bias can enter AI systems through data collection, algorithm design, or human prejudices embedded in the system.

To reduce bias and promote ethical AI, developers must use diverse and balanced datasets, ensure transparent decision-making, and regularly audit AI models for fairness. Ethical AI principles also emphasize explainability, meaning AI decisions should be understandable and justifiable. Governments and organizations are introducing AI regulations and ethical guidelines to prevent harmful consequences and promote responsible AI use. By addressing these challenges, AI can become a powerful and fair tool for improving society.

2. Algorithmic Bias

Algorithmic Bias occurs when AI systems produce unfair, prejudiced, or discriminatory results due to biases present in the data, model design, or decision-making processes. These biases can lead to unintended consequences, such as racial or gender discrimination in hiring, loan approvals, or law enforcement. For example, if an AI model is trained on historical hiring data that favors men, it may continue to recommend male candidates over equally qualified female applicants, reinforcing societal biases.

The causes of algorithmic bias can be traced to several factors. Biased training data is a major issue, as AI models learn from historical data that may not be diverse or representative. Flawed algorithm design can also contribute to bias, where AI systems assign more importance to certain attributes, leading to unfair decisions. Additionally, the lack of diversity in AI development teams can result in overlooking biases, as developers may unintentionally embed their own perspectives into the models. AI systems can also reinforce existing prejudices by learning from past decisions that were already influenced by human biases.

To mitigate algorithmic bias, AI developers must focus on using diverse and representative datasets, regularly auditing AI models for bias, and applying fairness-aware algorithms that actively minimize discrimination. Increasing transparency in AI decision-making and encouraging diverse development teams can also help reduce bias. By taking these measures, AI can be made more ethical, fair, and beneficial for all users, ensuring that technology does not reinforce societal inequalities but instead helps to eliminate them.
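One simple way to make "auditing AI models for bias" concrete is to compare how often each group receives a positive decision. The sketch below is only an illustration under stated assumptions: the function names, the group labels, and the toy data are hypothetical, and the selection-rate ratio is just one of several audit metrics. A commonly cited rule of thumb, the "four-fifths rule", treats a ratio below 0.8 as a signal for closer review, not as proof of discrimination.

```python
from collections import defaultdict

def selection_rates(groups, decisions):
    """Return the fraction of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive decision
    return min(rates.values()) / highest

# Hypothetical toy data: 1 = recommended for interview, 0 = rejected.
groups = ["m", "m", "m", "m", "f", "f", "f", "f"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, decisions)
ratio = disparate_impact_ratio(rates)
print("selection rates:", rates)
print("disparate impact ratio:", round(ratio, 3))
```

In practice such a check would be run on held-out data at regular intervals, alongside other metrics, since a single number cannot capture every form of unfairness.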

3. AI Fairness

AI Fairness refers to the development and deployment of artificial intelligence systems that provide unbiased and equitable outcomes for all users, regardless of gender, race, age, or other demographic factors. Ensuring fairness in AI is essential because biased models can lead to discrimination in areas such as hiring, lending, law enforcement, and healthcare. For example, if an AI-driven hiring tool is trained on past data that favors male candidates, it may unfairly reject female applicants, reinforcing existing inequalities. Fair AI systems aim to eliminate such biases and ensure that decisions made by AI are just and impartial.

Achieving AI fairness involves multiple strategies. One key approach is using diverse and representative training data to ensure that AI models are exposed to a wide range of scenarios and perspectives. Regular audits and fairness tests should be conducted to detect and correct any biases in the model. Developers can also implement bias-mitigation techniques such as re-weighting datasets, adjusting algorithms to promote fairness, and using explainable AI methods to understand and correct potential unfairness. Additionally, human oversight is necessary to ensure AI decisions align with ethical standards and societal values.
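As an illustration of re-weighting a dataset, the sketch below assigns each training example the weight P(group) x P(label) / P(group, label), so that group membership and label look statistically independent once the weights are applied. This is one well-known re-weighting scheme, not necessarily the one a given project would use, and the function names and toy data are assumptions made for the example.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total

# Hypothetical historical hiring data: 1 = hired, 0 = not hired.
groups = ["m", "m", "m", "m", "f", "f", "f", "f"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for group in ("m", "f"):
    rate = weighted_positive_rate(groups, labels, weights, group)
    print(group, round(rate, 3))
```

The resulting weights would typically be passed to a training routine that accepts per-sample weights. Under-represented combinations (here, hired women and rejected men) receive larger weights, so the weighted positive rate becomes the same for both groups.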

Despite these efforts, AI fairness remains a complex challenge due to the difficulty of defining fairness universally. Different cultures and industries may have varying definitions of fairness, making it essential to involve policymakers, ethicists, and diverse stakeholders in AI development. By prioritizing fairness in AI, developers can create systems that foster trust, promote inclusivity, and minimize unintended harm, ensuring that AI benefits society as a whole rather than reinforcing existing disparities.

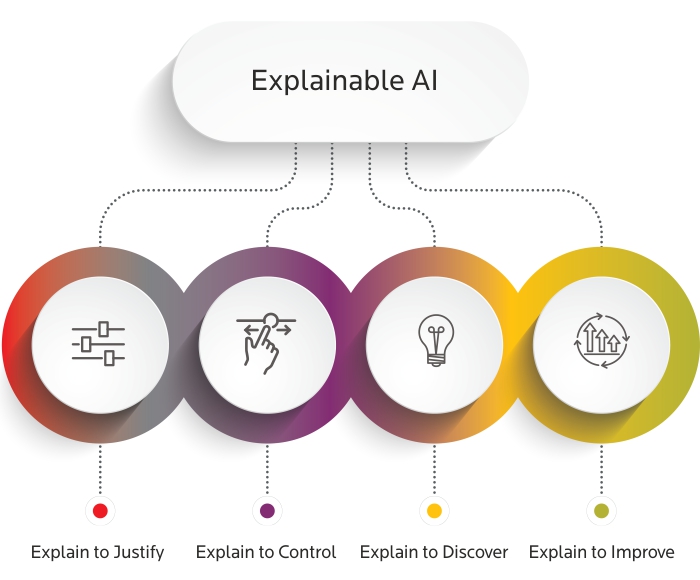

4. Explainable AI

Explainable AI (XAI) refers to artificial intelligence systems that provide clear, understandable, and interpretable reasoning for their decisions and predictions. Unlike complex models such as deep neural networks, which often operate as "black boxes" with opaque decision-making processes, XAI aims to make AI more transparent and accountable. This is particularly important in sensitive fields such as healthcare, finance, and criminal justice, where AI-driven decisions can significantly impact individuals and society. By making AI more interpretable, XAI helps build trust between AI systems and users, ensuring that automated decisions can be reviewed, justified, and improved when necessary.

The development of XAI involves various techniques to make AI models more understandable. One approach is feature importance analysis, which identifies which factors influence a model’s decision the most. Another method is using interpretable models, such as decision trees and linear regression, which allow humans to follow the decision-making logic easily. Additionally, model-agnostic techniques, such as Local Interpretable Model-Agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP), provide insights into how complex models like deep learning networks arrive at their conclusions. Visualizations, such as heatmaps and attention maps in image processing, also help users see how AI processes information.
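Feature importance analysis can be sketched with permutation importance: shuffle one feature at a time and measure how much the model's accuracy drops. The example below is a minimal, model-agnostic illustration and is not LIME or SHAP; the toy model, the random data, and all function names are hypothetical.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows for which the model predicts the given label."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(labels)

def permutation_importance(model, rows, labels, n_features, n_repeats=20, seed=0):
    """Mean accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild the rows with only feature j replaced by shuffled values.
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical model that only looks at feature 0 and ignores feature 1.
def toy_model(row):
    return 1 if row[0] > 0.5 else 0

data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [toy_model(row) for row in rows]

importances = permutation_importance(toy_model, rows, labels, n_features=2)
print("importance per feature:", importances)
```

Because the toy model ignores feature 1, shuffling that column never changes a prediction and its importance is zero, while shuffling feature 0 causes a clear drop in accuracy.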

Despite advancements, Explainable AI faces challenges, particularly in balancing transparency with performance. Highly interpretable models may not always be as accurate as complex deep learning models. Additionally, what counts as a "satisfactory" explanation depends on the audience: technical experts may require mathematical justifications, while end-users may need simple and intuitive explanations. Nevertheless, XAI is crucial for ensuring fairness, accountability, and trust in AI systems, making AI-driven decisions more reliable, ethical, and aligned with human values.

5. AI in Decision Making

AI plays a crucial role in decision-making by analyzing vast amounts of data, identifying patterns, and providing actionable insights to improve efficiency and accuracy. Traditional decision-making processes rely on human judgment, which can be influenced by biases, emotions, and limited data processing capabilities. In contrast, AI-powered systems can quickly analyze complex datasets, predict outcomes, and recommend the best possible course of action based on historical data and real-time inputs. AI is widely used in industries such as healthcare, finance, supply chain management, and customer service, where data-driven decisions can lead to better outcomes.

One of the key benefits of AI in decision-making is its ability to automate repetitive tasks and provide data-driven recommendations. For example, in the healthcare industry, AI assists doctors in diagnosing diseases by analyzing medical records and imaging data, reducing human errors and improving patient care. In finance, AI-powered algorithms assess credit risk, detect fraudulent transactions, and optimize investment strategies by analyzing market trends. Businesses also use AI for demand forecasting, helping them optimize inventory management and reduce waste. AI-driven decision-making systems leverage machine learning, deep learning, and natural language processing (NLP) to continuously improve their accuracy and effectiveness over time.

Despite its advantages, AI-driven decision-making also raises challenges and ethical concerns. Algorithmic bias, lack of transparency, and over-reliance on AI can lead to unintended consequences. If AI models are trained on biased data, they may reinforce existing inequalities or make unfair recommendations. Additionally, black-box AI models make it difficult for users to understand the reasoning behind AI-generated decisions, reducing trust in AI systems. To address these concerns, organizations must implement Explainable AI (XAI) techniques, ensure diversity in training data, and establish human oversight in critical decision-making processes. As AI continues to evolve, its integration into decision-making will become more sophisticated, enhancing productivity and innovation across various industries.

6. Ethical AI Frameworks

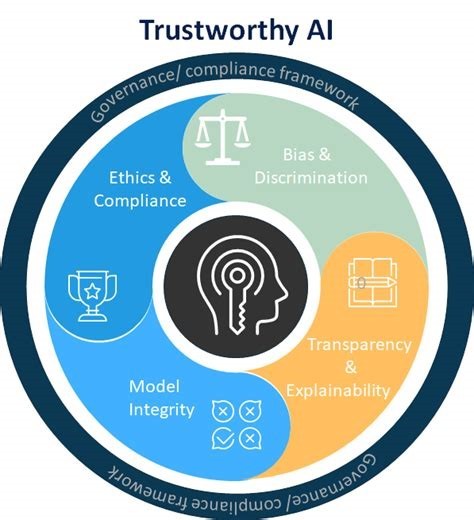

Ethical AI frameworks are structured guidelines that ensure the responsible development and deployment of artificial intelligence systems. These frameworks focus on principles like fairness, transparency, accountability, privacy, and inclusivity to prevent biases and unethical use of AI. Various organizations, governments, and research institutions have proposed ethical AI guidelines to promote trustworthy AI development. For instance, the European Union’s AI Act, the IEEE’s Ethically Aligned Design, and the OECD AI Principles provide standardized approaches to ensure AI systems are fair, explainable, and beneficial to society. These frameworks help businesses and developers align their AI models with ethical and legal requirements, reducing risks associated with bias, discrimination, and privacy violations.

One key aspect of ethical AI frameworks is bias mitigation and fairness. AI models can inherit biases from training data, leading to unfair outcomes, especially in sensitive areas like hiring, lending, and law enforcement. Ethical AI frameworks emphasize bias detection, diverse dataset inclusion, and continuous monitoring to reduce discriminatory effects. Techniques like fairness-aware machine learning and adversarial debiasing help ensure that AI systems make unbiased decisions. Furthermore, regulatory bodies encourage developers to perform impact assessments to evaluate AI's societal implications before deployment.

Another critical element of ethical AI frameworks is explainability and accountability. Many AI models, especially deep learning systems, operate as "black boxes," making it difficult to interpret their decision-making process. Ethical AI frameworks advocate for explainable AI (XAI), which ensures transparency by making AI-generated decisions understandable to humans. Additionally, accountability mechanisms assign responsibility for AI-driven decisions, ensuring that organizations remain liable for their AI applications. By implementing ethical AI frameworks, businesses and governments can build public trust in AI, ensuring that technology serves humanity responsibly and ethically.

7. AI & Privacy Concerns

Privacy concerns have become a major issue as artificial intelligence systems increasingly process vast amounts of personal data. AI-powered applications, such as facial recognition, recommendation systems, and digital assistants, often require access to sensitive user information, raising concerns about data security and misuse. The primary risks include unauthorized data collection, lack of user consent, and potential breaches that expose personal information to cyber threats. Additionally, many AI models rely on large datasets that may contain personally identifiable information (PII), making data anonymization and encryption essential to protect user privacy. Governments and organizations worldwide are implementing regulations like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) to ensure AI systems handle user data responsibly.

One significant privacy concern is data surveillance and tracking. AI-powered algorithms, especially in social media and online advertising, analyze user behavior, preferences, and interactions to personalize content and target advertisements. While this can improve the user experience, it also raises concerns about excessive tracking and manipulation. Companies using AI-driven tracking technologies must ensure transparency, allowing users to control their data and opt out of invasive monitoring practices. Furthermore, AI-driven surveillance systems used in law enforcement and public spaces, such as facial recognition cameras, pose ethical dilemmas regarding mass surveillance and individual freedoms.

Another critical issue in AI and privacy is data security and breaches. AI models are vulnerable to attacks such as adversarial examples, model inversion, and data poisoning, which can expose confidential data or manipulate AI predictions. Organizations must implement privacy-preserving AI techniques, such as federated learning, which allows AI models to learn from decentralized data without sharing raw information. Additionally, techniques like differential privacy add carefully calibrated noise to results so that the output reveals very little about any individual user. As AI continues to evolve, ensuring privacy protection through robust security measures, ethical guidelines, and strict data policies will be essential to maintaining trust in AI technologies.
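To make differential privacy more tangible, the sketch below applies the Laplace mechanism to a counting query. Adding or removing one person changes a count by at most 1 (its sensitivity), so noise drawn from a Laplace distribution with scale 1/epsilon gives epsilon-differential privacy for that single query. The dataset, the function names, and the chosen epsilon are hypothetical, and a production system would also need to track the total privacy budget across queries.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng):
    """Return a noisy count of the values that satisfy the predicate."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0  # one person changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical dataset of user ages.
rng = random.Random(7)
ages = [23, 35, 41, 52, 29, 64, 38, 47]

noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of users aged 40 or older: {noisy:.2f}")
```

A smaller epsilon means more noise and stronger privacy, while a larger epsilon gives more accurate answers with weaker guarantees.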

8. AI Regulations

AI Regulations are essential for ensuring the ethical, fair, and responsible development of artificial intelligence systems. As AI continues to advance and integrate into various industries, governments and organizations worldwide are establishing legal frameworks to address concerns such as data privacy, bias, accountability, and transparency. Regulations like the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the U.S. set strict guidelines on how AI systems handle personal data, ensuring user consent, transparency, and the right to data deletion. These laws aim to prevent AI misuse and protect individuals from unauthorized data collection, profiling, and discrimination.

Another critical aspect of AI regulations is bias and fairness in algorithms. Many AI models rely on large datasets that may contain biases, leading to unfair treatment in areas such as hiring, lending, and law enforcement. To address this, regulatory bodies are pushing for AI fairness assessments, explainability, and bias detection frameworks. The European Union’s AI Act classifies AI applications into different risk levels, with high-risk AI systems facing strict compliance requirements to ensure fairness and accountability. Additionally, AI models used in healthcare, finance, and legal sectors must adhere to strict guidelines to avoid unethical decision-making and discrimination.

AI regulations also focus on accountability and transparency in AI-driven decisions. Many AI systems operate as "black boxes," making it difficult to understand how they arrive at specific decisions. To tackle this, laws are being introduced to mandate Explainable AI (XAI), ensuring that AI-generated outcomes can be interpreted by humans. Organizations are also required to maintain AI audit trails and provide clear documentation on their AI systems’ decision-making processes. As AI adoption grows, governments and industry leaders continue to refine regulations, ensuring AI remains a trustworthy, ethical, and human-centered technology that benefits society while minimizing risks.
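An AI audit trail can be as simple as an append-only log that records what the model saw, what it decided, and why. The sketch below is one possible shape for such a log, not a format required by any regulation: every field name is hypothetical, and the hash chaining is an added assumption that makes later tampering detectable.

```python
import hashlib
import json
from datetime import datetime, timezone

def _digest(body):
    """SHA-256 over a canonical JSON encoding of the record body."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def make_audit_record(model_version, inputs, output, explanation, previous_hash=""):
    """Build one log entry that is linked to the previous entry by its hash."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "explanation": explanation,
        "previous_hash": previous_hash,
    }
    record["record_hash"] = _digest(record)
    return record

def verify_chain(records):
    """Return True if no record was altered and the links are intact."""
    previous_hash = ""
    for record in records:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        if record["previous_hash"] != previous_hash:
            return False
        if _digest(body) != record["record_hash"]:
            return False
        previous_hash = record["record_hash"]
    return True

# Hypothetical loan decisions logged by a hypothetical model "credit-v1".
first = make_audit_record("credit-v1", {"income": 50000}, "approved",
                          "income above threshold")
second = make_audit_record("credit-v1", {"income": 20000}, "denied",
                           "income below threshold", first["record_hash"])
log = [first, second]
print("audit log intact:", verify_chain(log))
```

Storing the model version and a short explanation with each decision is what lets a reviewer later reconstruct how a specific outcome was reached.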
